About Connascence

Published by Manuel Rivero on 20/01/2017

Lately at Codesai we’ve been studying and applying the concept of connascence in our code, and we have even given an introductory talk about it. We’d like this post to be the first in a series of posts about connascence.

1. Origin.

The concept of connascence is not new at all. Meilir Page-Jones introduced it in 1992 in his paper Comparing Techniques by Means of Encapsulation and Connascence. Later, he elaborated on the idea in his 1995 book What Every Programmer Should Know About Object-Oriented Design, and in its more modern version (the same book, but using UML), Fundamentals of Object-Oriented Design in UML, from 1999.

Ten years later, Jim Weirich brought connascence back from oblivion in a series of talks: Grand Unified Theory of Software Design, The Building Blocks of Modularity and Connascence Examined. As we’ll see later in this post, he not only brought connascence back to life, but also improved its exposition.

More recently, Kevin Rutherford wrote a very interesting series of posts in which he talked about using connascence as a guide to choose the most effective refactorings, and about how connascence can be a more objective and useful tool than code smells for identifying design problems[1].

2. What is connascence?

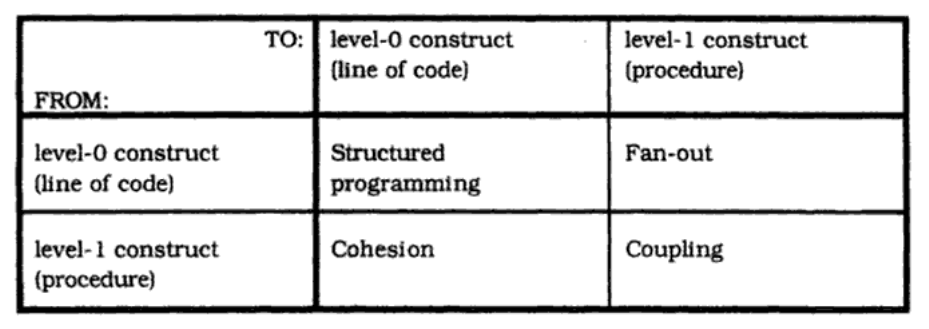

The concept of connascence appeared in the early nineties, when OO was starting on its path to becoming the dominant programming paradigm, as a general way to evaluate design decisions in an OO design. In the previous dominant paradigm, structured programming, fan-out, coupling and cohesion were the fundamental criteria used to evaluate design decisions. To make clear what Page-Jones understood by these terms, let’s see the definitions he used:

Fan-out is a measure of the number of references to other procedures by lines of code within a given procedure.

Coupling is a measure of the number and strength of connections between procedures.

Cohesion is a measure of the “single-mindedness” of the lines of code within a given procedure in meeting the purpose of that procedure.

According to Page-Jones, these design criteria govern the interactions between the levels of encapsulation that are present in structured programming: level-1 encapsulation (the subroutine) and level-0 (lines of code), as can be seen in the following table from Fundamentals of Object-Oriented Design in UML.

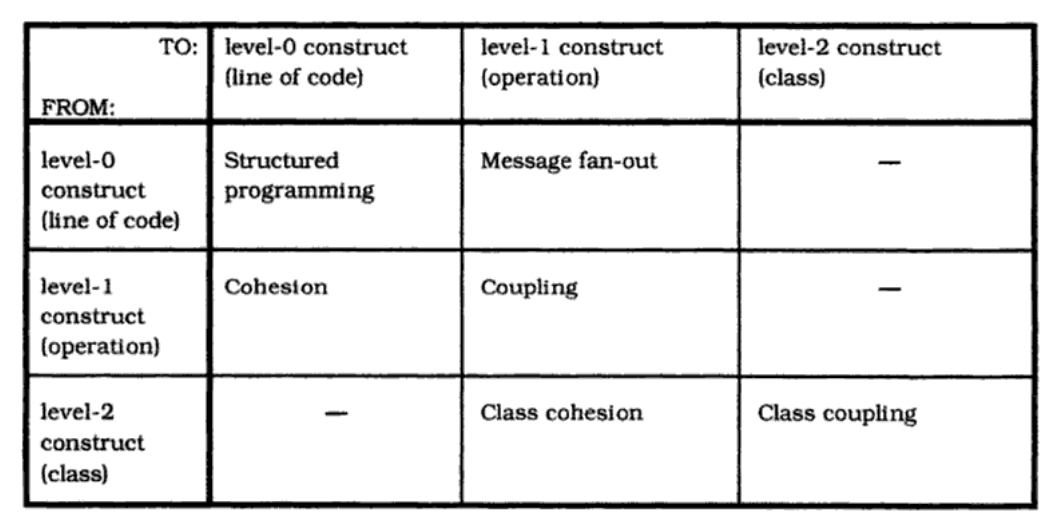

However, OO introduces at least level-2 encapsulation (the class), which encapsulates level-1 constructs (methods) together with attributes. This introduces many new interdependencies among encapsulation levels, which require new design criteria (see the following table from Fundamentals of Object-Oriented Design in UML).

Two of these new design criteria are class cohesion and class coupling, which are analogous to structured programming’s procedure cohesion and procedure coupling, but, as you can see, the table contains other criteria that don’t even have a name.

Connascence is meant to be a deeper criterion behind all of them and, as such, it is a general way to evaluate design decisions in an OO design. This is the formal definition of connascence by Page-Jones:

Connascence between two software elements A and B means either

that you can postulate some change to A that would require B to be changed (or at least carefully checked) in order to preserve overall correctness, or

that you can postulate some change that would require both A and B to be changed together in order to preserve overall correctness.

In other words, there is connascence between two software elements when they must change together in order for the software to keep working correctly.
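To make the definition concrete, here is a minimal Python sketch with two hypothetical functions (`make_order` plays element A and `describe_order` plays element B; neither comes from Page-Jones). If we postulate renaming the `"quantity"` key in A, B must be changed too for the program to keep working correctly, so A and B are connascent.

```python
def make_order(item: str, quantity: int) -> dict:
    # Element A (hypothetical): builds a record with the keys "item" and "quantity".
    return {"item": item, "quantity": quantity}


def describe_order(order: dict) -> str:
    # Element B (hypothetical): reads the same keys, so renaming "quantity"
    # in A would require changing B as well.
    return f"{order['quantity']} x {order['item']}"
```

For example, `describe_order(make_order("apple", 3))` returns `"3 x apple"` only as long as both functions agree on the key names.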

We can see how this new design criterion can be used for any of the interdependencies among encapsulation levels present in OO. Moreover, it can also be used for higher levels of encapsulation (packages, modules, components, bounded contexts, etc.). In fact, according to Page-Jones, connascence is applicable to any design paradigm with partitioning, encapsulation and visibility rules[2].

3. Forms of connascence.

Page-Jones distinguishes several forms (or types) of connascence.

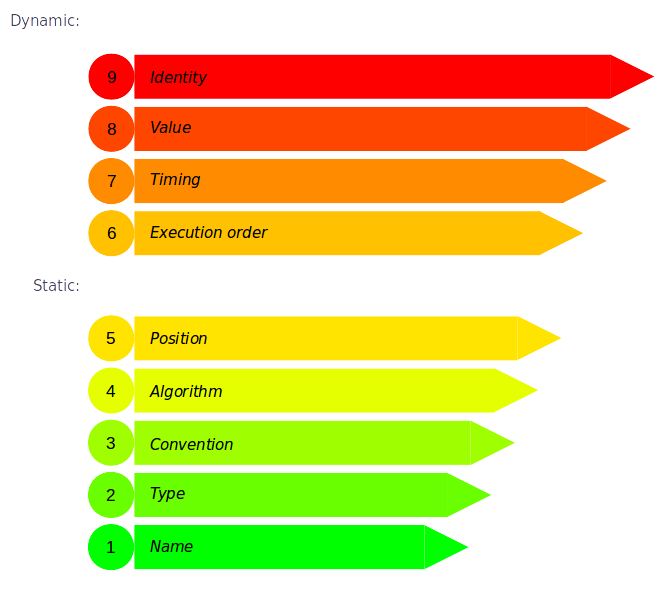

Connascence can be static, when it can be assessed from the lexical structure of the code, or dynamic, when it depends on the execution patterns of the code at run-time.

There are several types of static connascence:

- Connascence of Name (CoN): when multiple components must agree on the name of an entity.

- Connascence of Type (CoT): when multiple components must agree on the type of an entity.

- Connascence of Meaning (CoM) or Connascence of Convention (CoC): when multiple components must agree on the meaning of specific values.

- Connascence of Position (CoP): when multiple components must agree on the order of values.

- Connascence of Algorithm (CoA): when multiple components must agree on a particular algorithm.
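The following Python sketch illustrates each static form with hypothetical names (`Rectangle`, `find_index`, `write_packet` and `read_packet` are illustrations of ours, not examples from Page-Jones). All of these dependencies can be spotted by reading the code, without running it.

```python
from dataclasses import dataclass


@dataclass
class Rectangle:
    # CoT: callers must agree that width and height are numbers.
    width: float
    height: float

    # CoN: every caller must agree on the method name `area`.
    def area(self) -> float:
        return self.width * self.height


# CoP: callers must pass the positional arguments in the order (width, height).
banner = Rectangle(4.0, 1.0)


def find_index(items: list, target: object) -> int:
    # CoM: callers must know that -1 means "not found".
    for i, item in enumerate(items):
        if item == target:
            return i
    return -1


def write_packet(data: bytes) -> bytes:
    # CoA: appends a one-byte checksum computed as sum(data) % 256.
    return data + bytes([sum(data) % 256])


def read_packet(packet: bytes) -> bytes:
    # CoA: must repeat exactly the same checksum algorithm to validate the packet.
    data, check = packet[:-1], packet[-1]
    if sum(data) % 256 != check:
        raise ValueError("corrupted packet")
    return data
```

If someone changed the checksum in `write_packet` without updating `read_packet`, every packet would be rejected: that shared, duplicated knowledge is what makes CoA one of the stronger static forms.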

There are also several types of dynamic connascence:

- Connascence of Execution (order) (CoE): when the order of execution of multiple components is important.

- Connascence of Timing (CoTm): when the timing of the execution of multiple components is important.

- Connascence of Value (CoV): when there are constraints on the possible values some shared elements can take. It’s usually related to invariants.

- Connascence of Identity (CoI): when multiple components must reference the same entity.
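Here is a similar Python sketch for three of the dynamic forms, again with hypothetical classes (`Connection`, `Range`, `Cart` and `Checkout` are our own illustrations). Connascence of Timing is left out because showing it faithfully needs concurrency or real clocks. These dependencies only show up when the code runs: calling `send()` too early or building an invalid `Range` fails at run-time, and giving `Checkout` a different `Cart` instance silently misbehaves.

```python
class Connection:
    def __init__(self) -> None:
        self.open = False
        self.sent: list[str] = []

    def connect(self) -> None:
        self.open = True

    def send(self, message: str) -> None:
        # CoE: callers must call connect() before send().
        if not self.open:
            raise RuntimeError("send() called before connect()")
        self.sent.append(message)


class Range:
    def __init__(self, low: int, high: int) -> None:
        # CoV: low and high are constrained together by the invariant low <= high.
        if low > high:
            raise ValueError("invariant violated: low > high")
        self.low, self.high = low, high


class Cart:
    def __init__(self) -> None:
        self.items: list[str] = []


class Checkout:
    def __init__(self, cart: Cart) -> None:
        # CoI: Checkout must reference the same Cart instance the rest of the
        # application adds items to; an equal but different Cart is not enough.
        self.cart = cart

    def total_items(self) -> int:
        return len(self.cart.items)
```

For instance, after `cart = Cart()`, `checkout = Checkout(cart)` and `cart.items.append("book")`, `checkout.total_items()` returns 1 only because both objects reference the same `Cart`.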

Another important form of connascence is contranascence, which exists when elements are required to differ from each other (e.g., have different names in the same namespace, or be in different namespaces). Contranascence may also be either static or dynamic.
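As a hypothetical illustration of contranascence of names, the `PluginRegistry` class below (our own assumption, not from the original sources) requires every registered name to differ from all the others. The explicit check turns what would otherwise be a silent overwrite of one plugin by another into an error.

```python
class PluginRegistry:
    """Hypothetical registry in which every plugin name must be unique."""

    def __init__(self) -> None:
        self._plugins: dict[str, object] = {}

    def register(self, name: str, plugin: object) -> None:
        # Contranascence: a new name must differ from every registered name.
        if name in self._plugins:
            raise ValueError(f"duplicate plugin name: {name!r}")
        self._plugins[name] = plugin

    def get(self, name: str) -> object:
        return self._plugins[name]
```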

4. Properties of connascence.

Page-Jones talks about two important properties of connascence that help measure its impact on maintainability:

- Degree of explicitness: the more explicit a connascence form is, the weaker it is.

- Locality: connascence across encapsulation boundaries is much worse than connascence between elements inside the same encapsulation boundary.

A nice way to reformulate this is by using what are called the three axes of connascence[3]:

4.1. Degree.

The degree of an instance of connascence is related to the size of its impact. For instance, a software element that is connascent with hundreds of elements is likely to become a larger problem than one that is connascent to only a few.

4.2. Locality.

The locality of an instance of connascence refers to how close the two software elements are to each other. Elements that are close together (in the same encapsulation boundary) can typically have more, and stronger, forms of connascence than elements that are far apart (in different encapsulation boundaries). In other words, as the distance between software elements increases, the forms of connascence should be weaker.

4.3. Strength.

Page-Jones states that connascence has a spectrum of explicitness: the more implicit a form of connascence is, the stronger it is, and the more time-consuming and costly it is to detect. Also, a stronger form of connascence is usually harder to refactor. Following this reasoning, stronger forms of connascence are harder to detect and/or refactor. This is why static forms of connascence are weaker (easier to detect) than dynamic ones, or, for example, why CoN is much weaker (easier to refactor) than CoP.
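To illustrate why CoN is weaker than CoP, here is a hedged Python sketch with two hypothetical functions. Python’s keyword-only parameters (declared after a bare `*`) are one way to replace agreement on order with agreement on names; this is one possible refactoring, not the only one.

```python
# Stronger: Connascence of Position. Callers must remember the argument order.
def create_user_positional(name: str, email: str, is_admin: bool) -> dict:
    return {"name": name, "email": email, "is_admin": is_admin}


# Weaker: Connascence of Name. Keyword-only parameters mean callers only
# need to agree on the parameter names, not on their order.
def create_user(*, name: str, email: str, is_admin: bool) -> dict:
    return {"name": name, "email": email, "is_admin": is_admin}


# Swapping the two str arguments silently produces wrong data under CoP...
swapped = create_user_positional("ana@example.com", "Ana", False)
# ...while under CoN the order of the keyword arguments does not matter.
user = create_user(email="ana@example.com", is_admin=False, name="Ana")
```

Note that the CoP mistake is not detected by Python at all (both arguments are strings), which is exactly why it is harder to detect than a misspelled keyword, which raises a `TypeError`.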

The following figure by Kevin Rutherford shows the different forms of connascence we saw before, but sorted by descending strength.

5. Connascence, design principles and refactoring.

Connascence is simpler than other design principles, such as the SOLID principles or the Law of Demeter. In fact, it can be used to see those principles in a different light, in the same way they can be seen through more fundamental principles like the ones in the first chapter of Kent Beck’s Implementation Patterns book.

We use code smells, which are a collection of code quality antipatterns, to guide our refactorings and improve our design, but, according to Kevin Rutherford, they are not the ideal tool for this task[4]. Sometimes connascence might be a better metric to reason about coupling than the somewhat fuzzy concept of code smells.

Connascence gives us a more precise vocabulary to talk and reason about coupling and cohesion[5], and thus helps us better judge our designs in terms of coupling and cohesion and decide how to improve them. In the words of Gregory Brown, “this allows us to be much more specific about the problems we’re dealing with, which makes it easier to reason about the types of refactorings that can be used to weaken the connascence between components”.

It provides a classification of the forms of coupling in a system and, even better, a scale of the relative strength of the coupling each form of connascence generates. It’s precisely that scale of relative strengths that makes connascence a much better guide for refactoring. As Kevin Rutherford says:

"because it classifies the relative strength of that coupling, connascence can be used as a tool to help prioritize what should be refactored first"

Connascence explains why doing a given refactoring is a good idea.

6. How should we apply connascence?

Page-Jones offers three guidelines for using connascence to improve a system’s maintainability:

- Minimize overall connascence by breaking the system into encapsulated elements.

- Minimize any remaining connascence that crosses encapsulation boundaries.

- Maximize the connascence within encapsulation boundaries.
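As a rough Python sketch of these guidelines, assuming a hypothetical `Point` class: the strong connascence (the meaning of each position in the internal tuple) stays inside the class, while clients only depend on names (the `x` and `y` properties and keyword arguments), a weak form of connascence that is safe to let cross the boundary.

```python
class Point:
    """Hypothetical value object that hides its internal representation."""

    def __init__(self, *, x: float, y: float) -> None:
        # Inside the boundary: the tuple's positions mean (x, y), a form of
        # Connascence of Position that only this class depends on.
        self._coords = (x, y)

    # Across the boundary: clients only depend on the names `x` and `y` (CoN).
    @property
    def x(self) -> float:
        return self._coords[0]

    @property
    def y(self) -> float:
        return self._coords[1]

    def translated(self, *, dx: float, dy: float) -> "Point":
        x, y = self._coords
        return Point(x=x + dx, y=y + dy)
```

If the internal tuple were later replaced by two separate attributes, only the methods of `Point` would need to change; client code such as `Point(x=1, y=2).translated(dx=3, dy=4).x` would keep working.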

According to Kevin Rutherford, the first two points make up what he calls the Page-Jones refactoring algorithm[6].

These guidelines generalize the structured design ideals of low coupling and high cohesion, and are applicable to OO or, as was said before, to any other paradigm with partitioning, encapsulation and visibility rules.

They might still be a little subjective, so some of us prefer a more concrete way to apply connascence: Jim Weirich’s two principles, or rules:

Rule of Degree[7]: Convert strong forms of connascence into weaker forms of connascence.

Rule of Locality: As the distance between software elements increases, use weaker forms of connascence.
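A minimal Python sketch of applying the Rule of Degree, assuming a hypothetical order-status check: Connascence of Meaning on a magic number is converted into Connascence of Name using Python’s standard `enum.Enum`.

```python
from enum import Enum


# Before: Connascence of Meaning. Every caller must know that 1 means "paid".
def can_ship_legacy(status: int) -> bool:
    return status == 1


# After: Connascence of Name. Callers only agree on the name OrderStatus.PAID.
class OrderStatus(Enum):
    PENDING = 0
    PAID = 1
    CANCELLED = 2


def can_ship(status: OrderStatus) -> bool:
    return status is OrderStatus.PAID
```

Callers of `can_ship` no longer need to know which integer stands for “paid”, so the underlying values could change without touching them.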

7. What’s next?

In future posts, we’ll see examples of concrete forms of connascence, relating them to design principles, code smells, and refactorings that might improve the design.

Footnotes:

References.

Books.

- Fundamentals of Object-Oriented Design in UML, Meilir Page-Jones

- What Every Programmer Should Know About Object-Oriented Design, Meilir Page-Jones

- Structured Design: Fundamentals of a Discipline of Computer Program and Systems Design by Edward Yourdon and Larry L. Constantine

Papers.

Talks.

- The Building Blocks of Modularity, Jim Weirich (slides)

- Grand Unified Theory of Software Design, Jim Weirich (slides)

- Connascence Examined, Jim Weirich

- Connascence Examined (newer version, it goes into considerable detail of the various types of connascence), Jim Weirich (slides)

- Understanding Coupling and Cohesion hangout, Corey Haines, Curtis Cooley, Dale Emery, J. B. Rainsberger, Jim Weirich, Kent Beck, Nat Pryce, Ron Jeffries.

- Red, Green, … now what ?!, Kevin Rutherford (slides)

- Connascence, Fran Reyes and Alfredo Casado (slides)

- Connascence: How to Measure Coupling, Nick Hodges

Posts.

- Connascence as a Software Design Metric, Gregory Brown

- The problem with code smells, Kevin Rutherford

- A problem with Primitive Obsession, Kevin Rutherford

- The Page-Jones refactoring algorithm, Kevin Rutherford

- Connascence – Some examples, Mark Needham

Others.

Originally published on Manuel Rivero's blog.